Using GenAI for creating Pedagogical Resources/Materials in Education

Institution: University College Cork

Discipline: Education

Authors: João Costa, João Mota

GenAI tool(s) used: ChatGPT (3.5) and Microsoft Copilot (using GPT 4.0)

Situation / Context

This case study comes from an accredited professional degree in Teacher Education. It covers the design of a set of teaching and assessment resources created and curated to support the delivery of modules across the BEd Physical Education, Sports Studies and Arts (BEDPESSA) degree, namely Pedagogical Foundations (ED1324) and a suite of four modules centred on Curriculum-Based Physical Activities (ED1314, ED1323, ED2405, ED2406). The BEDPESSA programme caters to a cohort of 200 future PE and Arts teachers, 50 per year and per module. All modules sit within a new programme structure that was reconfigured during the last accreditation round, completed in 2022, and are centred on the implementation of curriculum and pedagogical models for post-primary education classrooms.

Task / Goal

A challenge we faced while delivering our modules was providing students with rich, detailed, and authentic accounts/problems of teaching contexts. These could guide and scaffold learning and assessment, helping students connect theory and practice in relation to curriculum and pedagogical models in post-primary education. The challenge stemmed mainly from the time needed to craft and curate these problems until they were ready for use while meeting the key pedagogical features of ecological validity and alignment. GenAI allowed us to automate and standardise most of the crafting process, freeing enough time to centre our efforts on refining the problems and guaranteeing their validity and alignment with the modules’ learning outcomes.

Actions / Implementation

As noted above, we used GenAI to develop a set of problems describing realistic educational contexts and the challenges stemming from the implementation of specific curriculum and instructional models (e.g., Flipped Classroom, Adventure Education).

Their use was twofold: 1) in the Curriculum-Based Physical Activities modules, the cases were used as hooks to open class discussion and drive the remainder of the class; and 2) in ED1324, the cases were used as assessment prompts that students had to analyse and discuss, showing an understanding of the links between theory and practice of pedagogical models in the classroom.

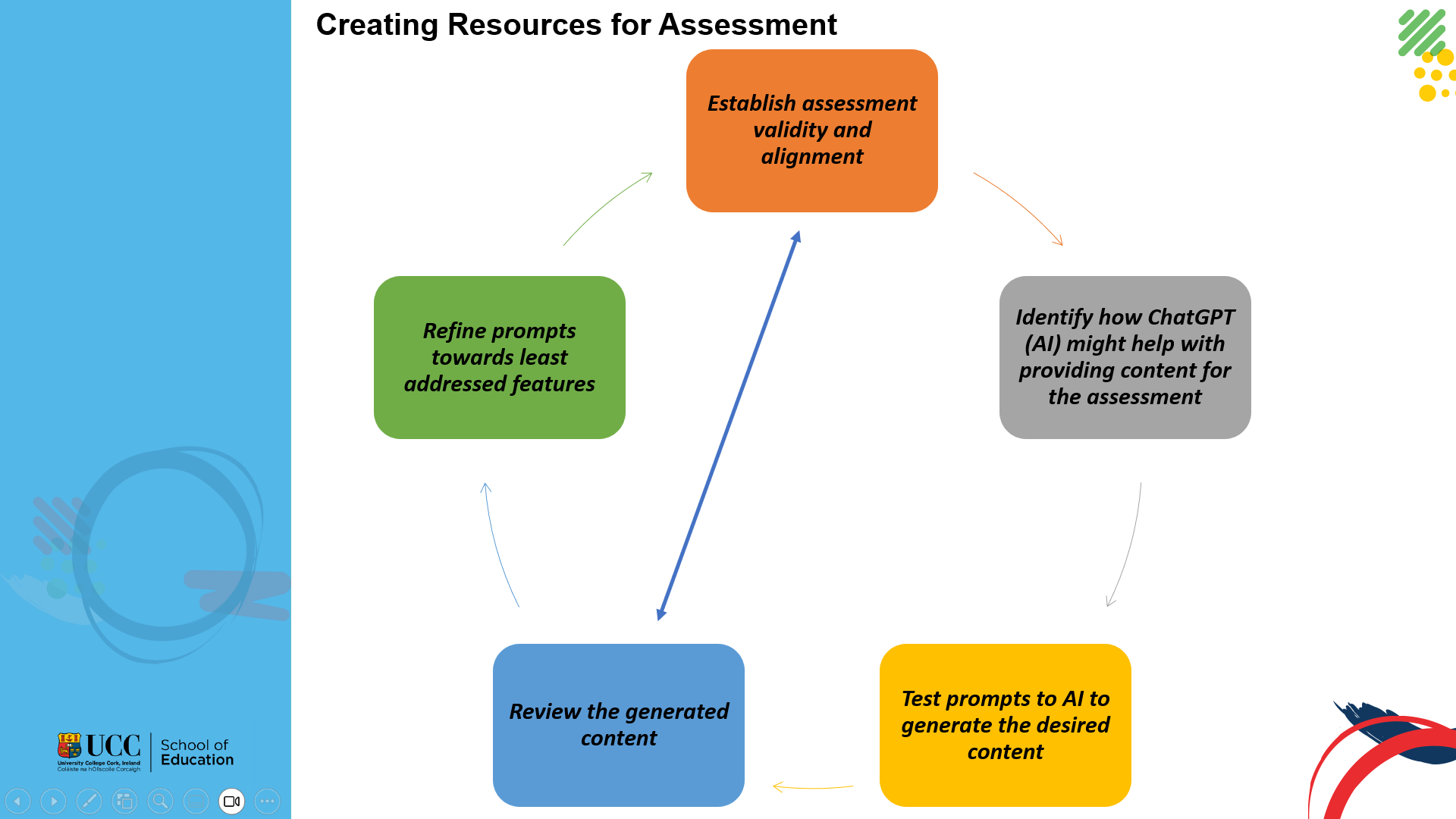

We prompted ChatGPT (free version using GPT 3.5) and Microsoft Copilot (using GPT 4.0) to design a problem or case scenario that included challenges, common errors, and/or key elements/principles of the models’ implementation. After reviewing and dissecting the outputs obtained, we refined the prompts to target the least addressed features of the scenarios. The resulting scenarios were then refined manually to align with the particular learning intentions/outcomes of each class/module.

The rubrics were also refined to maximise alignment and validity of the learning and the assessment. The two examples are explained further below with reference to the framework we developed for creating resources using GenAI, which is presented under Digital Resources.

To create learning experiences through scenarios used as hooks for class discussion, once the desired output had been established in line with the Learning Outcomes under the principles of alignment and validity, the following steps were taken:

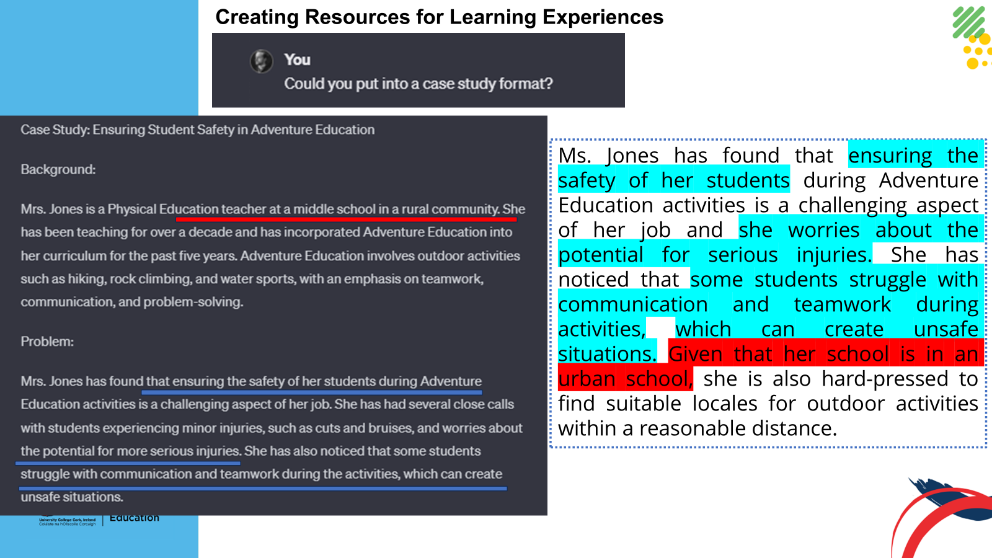

- prompting ChatGPT to write a problem involving a specific pedagogical approach (e.g., Adventure Education), “Write me a problem experienced by PE teachers when using Adventure Education”, and assessing the overall quality of the output in terms of validity and authenticity;

- prompting ChatGPT to convert the problem to a case study format, “Could you put into a case study format?”, as this format inherently adopts a clear structure with a Background section and a Problem section; and

- “manually” extracting the most relevant features to condense the case study text to suit the lecturer’s personal approach and the session’s aims (see sample figure below).

Fig. 1 – Full output vs. condensed version of the resource for class discussion. Blue and red highlights identify the core elements used for condensing purposes.
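The two prompting steps listed above can be sketched in a few lines of Python. This is only an illustration, assuming one wanted to reuse the same sequence for several pedagogical approaches; the function name `hook_prompts` is hypothetical, the prompt wording is taken verbatim from the steps above, and no GenAI service is called. The third step (manual condensing) remains a human task.

```python
# Hypothetical helper: returns, in order, the two prompts described in the
# steps above for a given pedagogical approach. It only builds the text;
# sending it to a GenAI tool and reviewing the output is left to the lecturer.
def hook_prompts(approach: str) -> list[str]:
    return [
        # Step 1: ask for a problem tied to the chosen approach.
        f"Write me a problem experienced by PE teachers when using {approach}",
        # Step 2: ask for the problem to be restructured as a case study.
        "Could you put into a case study format?",
    ]


if __name__ == "__main__":
    for step, prompt in enumerate(hook_prompts("Adventure Education"), start=1):
        print(f"Step {step}: {prompt}")
```

Keeping the approach as the only variable mirrors how the same sequence was reused across pedagogical models, while leaving quality checks for validity and authenticity outside the code.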

This process was then replicated for the remaining pedagogical models, allowing students to use time and resources efficiently and effectively.

To generate case studies as assessment prompts for students to analyse, a sample pedagogical model was used to develop and refine a master prompt structure that would then be filled in with each of the remaining pedagogical models (for a total of five).

The first test prompt aimed at generating a case aligned to learning outcomes, namely a) outlining models of pedagogical practice and b) discussing examples of pedagogical practice: “Create a case scenario of teaching through case-based learning, making clear that it is a case-based teaching model, with one good feature and one negative feature”.

When assessing the output, it was noted that the generated scenario focused on a third-level setting, thus immediately revealing that the prompt had to specify the secondary-level setting to ensure authenticity and validity: “Refine the case scenario to focus on secondary school and expand the scenario.”

Lastly, the refined output still included, in one of the features, an element beyond the teacher’s control, which did not suit the aim of the assessment relative to the Learning Outcomes. This led to a further refinement prompt: “Refine the negative feature to focus on an element that Ms. Garcia could do better in the classroom when implementing the case-based teaching model”.

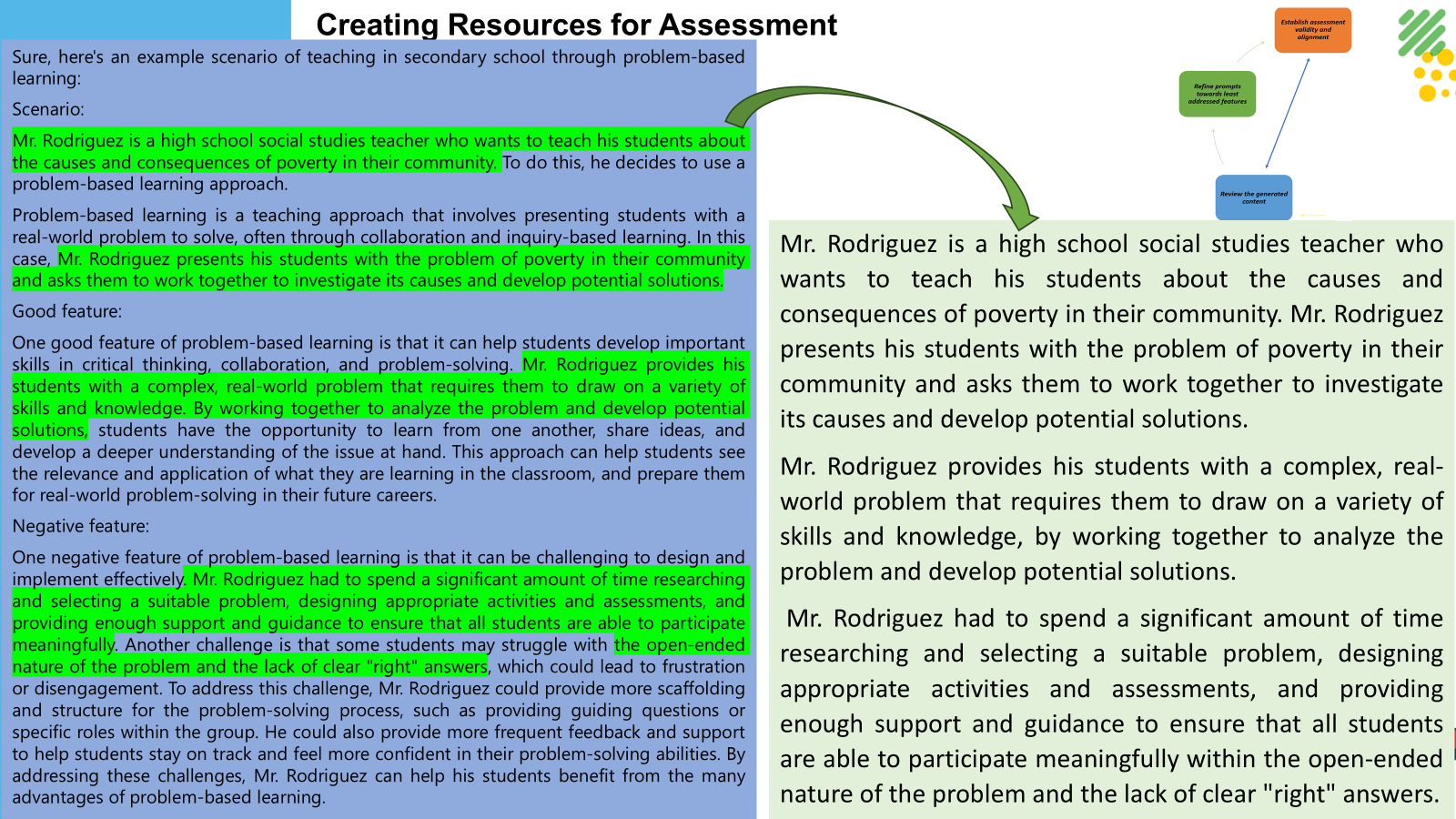

The final output showed sufficient validity and alignment with the intended output. A master prompt was then tested with a different pedagogical model, to prevent ChatGPT from simply reusing the previous outputs and to push the test further: “Create a scenario of teaching in secondary school through problem-based learning, describing the problem-based teaching model, and referring to one good feature and one negative feature in the scenario”. Each of the generated cases was “manually” refined for enhanced cultural responsiveness, for example by changing names to more common Irish names and adapting educational settings, a step that could eventually have been incorporated into the master prompt.
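The master prompt structure can be expressed as a simple template. The following is a minimal sketch in Python, assuming the only parts that change between pedagogical models are the name of the approach and the label of the teaching model; the names `MASTER_PROMPT` and `master_prompt` are hypothetical, and the wording is taken from the tested prompt quoted above.

```python
# Template derived from the master prompt quoted above. The two placeholders
# are an assumption about which parts vary between pedagogical models.
MASTER_PROMPT = (
    "Create a scenario of teaching in secondary school through {approach}, "
    "describing the {model_label}, and referring to one good feature "
    "and one negative feature in the scenario"
)


def master_prompt(approach: str, model_label: str) -> str:
    """Fill the master prompt for one pedagogical model (hypothetical helper)."""
    return MASTER_PROMPT.format(approach=approach, model_label=model_label)


if __name__ == "__main__":
    # Reproduces the prompt used in the test with problem-based learning.
    print(master_prompt("problem-based learning", "problem-based teaching model"))
```

Filling the same template for each remaining model keeps the cases comparable in structure, which matters when they are used as parallel assessment prompts.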

In less than one hour, all five cases were generated and condensed to a desired length that was consistent across all pedagogical models (e.g., between 100 and 150 words) and then incorporated into the assignment for students (see sample figure below).

Fig. 2 – Full output vs. condensed version of the resource for assignment (before cultural adaptation). Green highlights identify the core elements used for condensing purposes.
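Consistency of length across the condensed cases can be checked mechanically. The sketch below is an illustration only, assuming a simple whitespace-based word count and the 100 to 150 word range given above as an example; the function names are hypothetical, and the sample text is a placeholder rather than one of the actual cases.

```python
# Hypothetical helpers for checking that a condensed case falls within a
# target word range. Words are counted by splitting on whitespace, which is
# an assumption; other counting conventions may differ slightly.
def word_count(text: str) -> int:
    return len(text.split())


def within_target(text: str, low: int = 100, high: int = 150) -> bool:
    """Return True if the text has between `low` and `high` words, inclusive."""
    return low <= word_count(text) <= high


if __name__ == "__main__":
    placeholder_case = "word " * 120  # stands in for a condensed case
    print(word_count(placeholder_case), within_target(placeholder_case))
```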

Outcomes

The outcomes from this practice refer to different elements:

- the actual problems and scenarios for each of the modules that hold ecological validity and alignment to the learning outcomes;

- a conceptual and practical framework that derives from the educational best practice of instructional alignment, embedding principles of using GenAI; and

- a set of pedagogical practices for learning and assessment that embeds the design of GenAI at its core, which can be used to continuously update and improve teaching, learning, and assessment.

The resources were evaluated with peers who reviewed the content and the process, as well as through student feedback on the modular experience. The framework has been evaluated through presenting and piloting with the School of Education during a professional development day.

Reflections

This endeavour was driven largely by our initial curiosity about the prospective use of GenAI to lighten the load of preparing teaching and assessment resources while ensuring their validity and alignment. We were positively surprised by the authenticity of the outputs produced with minimal tinkering once we had developed a pattern of effective prompts, which we could then translate into a conceptual framework. This deepened our conviction that GenAI can be meaningfully used to support problem-based teaching and assessment practices and to minimise the upfront time required to prepare resources, while maximising the energy and time available for lecturers to focus on in-class interaction and the provision of quality feedback.

For improvement next time, we are thinking of guiding students in adopting the framework as teachers during school placement. There, they can test the extent to which the assessment practices can be addressed through GenAI. As a result, they might identify how the experience can be improved and enhance its internal validity in assessing student learning.

Digital Resources

Fig. 3 – Conceptual Framework for using GenAI to create assessment resources.

Additional Resource: Mota and Costa_AI in Education

Author Biographies

Dr João Costa is a Physical Education Teacher Educator, holding a Doctorate in Teacher Education from the University of Lisbon in Portugal. His Doctorate was awarded the first prize in Research in Sport Psychology and Pedagogy by the Portuguese Olympic Committee / Millennium BCP Sports Sciences Research Awards in 2017. He currently serves as a Lecturer in Education (Sport Pedagogy) in the School of Education at UCC, for the B.Ed. (Hons) of Physical Education, Sports Studies, and Arts where he takes the roles of Deputy Director and Interim School Placement Coordinator. His research interest focuses on Quality Physical Education, having Co-Coordinated the development of the European Physical Education Observatory for the European Physical Education Association, where he served as Representative for the South Region of Europe between 2018 and 2023. João is also serving NCCA as Chair of the Leaving Certificate Physical Education Subject Development Group and collaborates in the independent research network of the Global Observatory of Physical Education as a member of the Coordination Team and Research Team.

Dr João Mota is from Lisbon, Portugal. He has previously taught for five years at the Faculty of Human Kinetics, his alma mater. He has a Bachelor’s degree in Sports Science (minor in Exercise and Health), a Master’s degree in Physical Education and a PhD in Education. His research focuses on Physical Literacy, Pedagogy/Didactics of Physical Activities, Educational Assessment and Psychometrics. As part of his PhD, he led the creation and initial validation of the Portuguese Physical Literacy Assessment (PPLA). He has published several articles in international peer-reviewed journals and is an active peer reviewer. He has been involved in various international projects funded by the Erasmus+ program as a researcher-collaborator for the Centre for Education Studies and the UIDEF pole (FMH and IE-ULisboa). His sports background is in Dance, Swimming and Team Handball. When he is not working, he loves exploring the outdoors through mountaineering, hiking, and trail running. He has a strong passion for non-formal education, particularly Scouting—where he actively collaborates as a curriculum developer and adult educator.