Code CompAIre in Computer Sciences

Institution: Trinity College Dublin

Discipline: Computer Sciences

Authors: Jonathan Dukes, Béibhinn Nic Ruairí

GenAI tool(s) used: GitHub Copilot and ChatGPT (GPT-3.5 Turbo)

Situation / Context

Students often see only their own work in response to a learning activity or assessment, and receive feedback on it only when sufficient time and resources are available. Peer assessment and group activities can expose students to other responses and provide alternative sources of feedback, but these activities require substantial organisational effort.

Generative AI can produce multiple, diverse responses for the same activity or assessment, including responses with desired characteristics (e.g., verbose, concise, incomplete, flawed). This showcase describes an easily deployed activity where students work independently to compare AI-generated responses with their own responses and, while doing so, are invited to reflect on the quality of the AI responses and their own work.

A group of eight first-year Computer Science students at Trinity College Dublin trialled the comparison activity in March 2024. The context for the activity was a programming task in Java, a language with which the students were already familiar. The study was not conducted in the context of any specific module.

Task / Goal

The primary goal was to design an activity that would encourage novice programmers to reflect on their own solution to a programming task and, more broadly, their competency with computer programming, through comparisons with alternative solutions. These alternative solutions should differ from each other with respect to qualities or features of interest (e.g., efficiency, complexity, completeness, use of specific programming paradigms).

The comparison activity was motivated and influenced by the work of Nicol (2021) on comparison processes and “internal feedback”, defined by Nicol as “new knowledge that students generate when they compare their current knowledge and competence against some reference information.”

A secondary goal of the activity was to expose novice programmers to the capabilities of generative AI coding assistants, such as GitHub Copilot, as well as their deficiencies.

Actions / Implementation

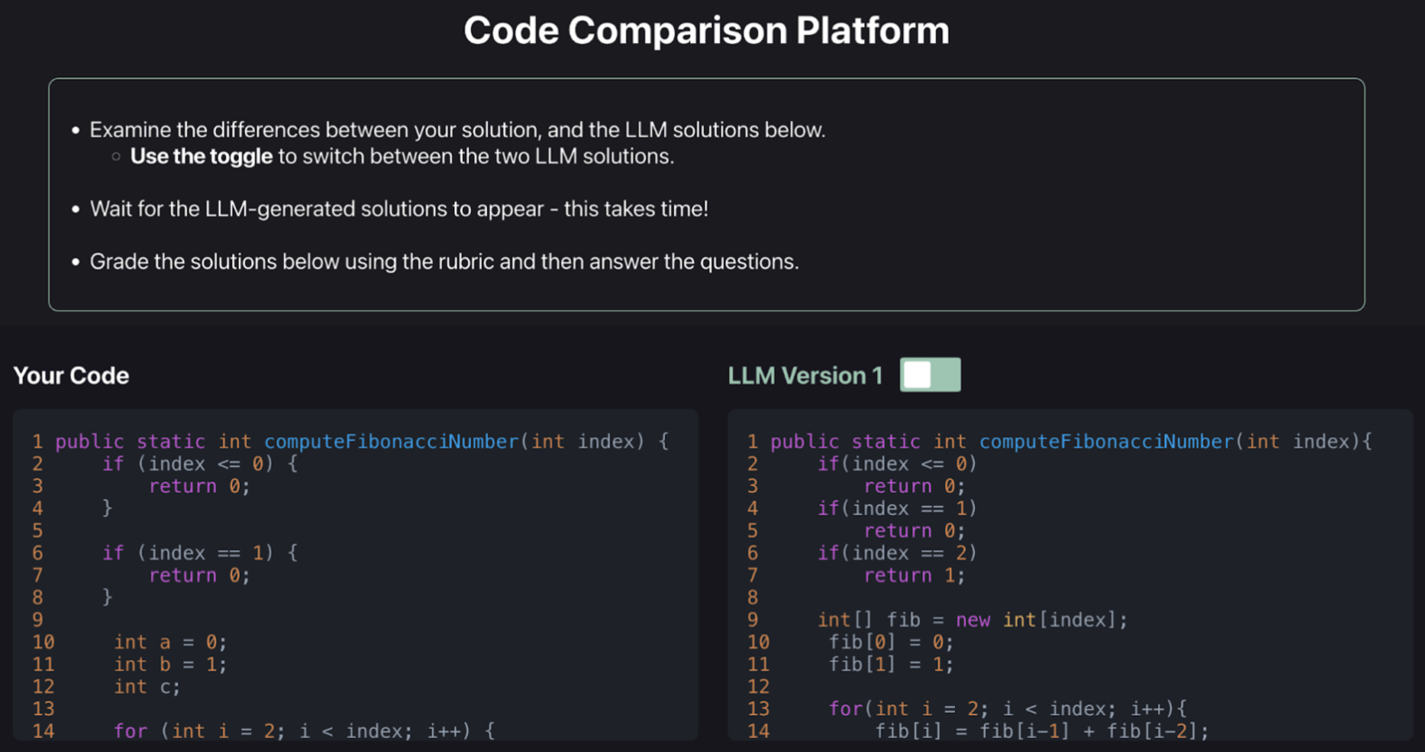

A prototype web-based tool to support the comparison activity was developed by a final-year Computer Science student. The comparison tool prompted students to formulate their own solution for a programming task and then used generative AI to create two alternative solutions, which were presented side-by-side with the student’s solution for easy comparison.

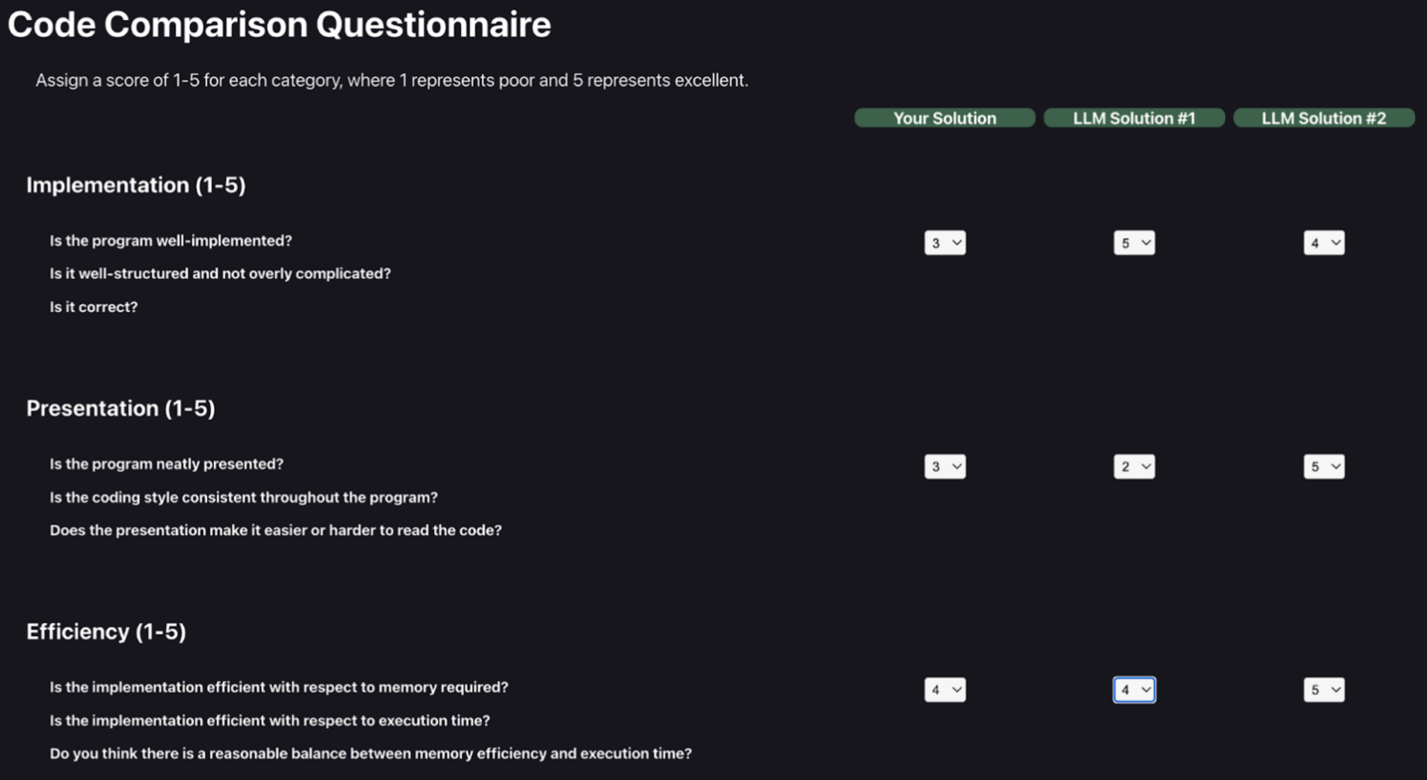

After comparing their own solution with the AI-generated solutions, students were asked to score their work against a rubric and write short statements (2–3 lines each) describing (i) the differences between their own work and the alternative solutions, (ii) what they learned, and (iii) what they might do differently given a similar task. These reflective statements have been identified as a critical step in comparison activities (Nicol, 2021).

The OpenAI GPT-3.5-Turbo Large Language Model (LLM) was used to dynamically generate alternative solutions. Prompt engineering was used to “encourage” the generation of diverse solutions with specific features. The generated solutions were tested for correctness so students would not see programs that did not function correctly (or did not function at all). Working solutions were then automatically evaluated with respect to qualitative measures (e.g., similarity, complexity) and two dissimilar solutions were selected and presented to the student through the web interface.
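The final selection step can be illustrated with a minimal sketch. The similarity measure used by the prototype is not described here, so the example assumes a simple token-level Jaccard similarity; the class name `DissimilarPairSelector` and its methods are hypothetical and are not taken from the tool. Given a list of candidate solutions that have already passed correctness tests, it returns the pair with the lowest similarity score.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: picks the two least similar solutions from a list of
// candidates. Token-level Jaccard similarity is an assumption made for this
// sketch, not the measure used by the prototype.
class DissimilarPairSelector {

    // Words/numbers as one token each; every other non-space character alone.
    private static final Pattern TOKEN = Pattern.compile("\\w+|[^\\s\\w]");

    // Collects the distinct tokens that appear in a piece of source code.
    static Set<String> tokens(String source) {
        Set<String> result = new HashSet<>();
        Matcher matcher = TOKEN.matcher(source);
        while (matcher.find()) {
            result.add(matcher.group());
        }
        return result;
    }

    // Jaccard similarity: size of the intersection divided by size of the union.
    static double similarity(String a, String b) {
        Set<String> tokensA = tokens(a);
        Set<String> tokensB = tokens(b);
        Set<String> union = new HashSet<>(tokensA);
        union.addAll(tokensB);
        if (union.isEmpty()) {
            return 1.0; // two empty programs are treated as identical
        }
        Set<String> intersection = new HashSet<>(tokensA);
        intersection.retainAll(tokensB);
        return (double) intersection.size() / union.size();
    }

    // Compares every pair of candidates and returns the least similar pair.
    static List<String> selectMostDissimilar(List<String> candidates) {
        if (candidates.size() < 2) {
            throw new IllegalArgumentException("Need at least two candidate solutions");
        }
        int bestI = 0;
        int bestJ = 1;
        double lowest = Double.MAX_VALUE;
        for (int i = 0; i < candidates.size(); i++) {
            for (int j = i + 1; j < candidates.size(); j++) {
                double score = similarity(candidates.get(i), candidates.get(j));
                if (score < lowest) {
                    lowest = score;
                    bestI = i;
                    bestJ = j;
                }
            }
        }
        return List.of(candidates.get(bestI), candidates.get(bestJ));
    }

    public static void main(String[] args) {
        // Illustrative candidates only; in practice these would be generated
        // solutions that have already passed the correctness tests.
        List<String> candidates = List.of(
            "int sum = 0; for (int i = 0; i < n; i++) { sum += i; }",
            "int sum = 0; for (int i = 0; i < n; i++) { sum = sum + i; }",
            "return n * (n - 1) / 2;");
        for (String solution : selectMostDissimilar(candidates)) {
            System.out.println(solution);
        }
    }
}
```

A token-set measure like this ignores structure and ordering, so it is only a rough proxy for how different two programs appear to a student; a measure based on syntax trees or on complexity metrics could be substituted without changing the selection loop.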

Outcomes

All eight students who participated in the trial either agreed (n=2) or strongly agreed (n=6) that comparing their own solutions with alternative solutions was a good exercise when learning how to program. When asked whether the differences between the two AI-generated solutions were useful for understanding alternative approaches to solving a problem, seven of the eight students agreed (n=6) or strongly agreed (n=1). When asked whether they would make use of the comparison tool in an introductory programming course, five of the eight students either agreed (n=4) or strongly agreed (n=1).

Adopting the Generative AI comparison activity would require relatively minor effort on the part of educators. It could be adopted as a supplementary component of an existing activity or as a stand-alone activity. Even without the support of technology (e.g., the web-based tool described here), students could be given simple instructions, including sample prompts to manually generate responses using services such as ChatGPT, a template for scoring responses and prompts for reflective writing.

Reflections

GenAI is well-suited to comparison activities. In other circumstances, we might be concerned about the capacity of GenAI to produce flawed responses to a prompt but, for our comparison activity, we are actively encouraging such behaviour.

We have been encouraged by the responses of participants in our small-scale study and by the capacity of LLMs to produce responses with characteristics that we believe will be beneficial in comparison activities.

In this study, we did not analyse (or record) how students scored their own responses or the generated responses for the programming task. Nor did we record students’ reflective statements. Such an analysis should be considered in a larger-scale study.

This study has also only explored GenAI for comparison activities in the context of computer programming education. Analogous comparison activities should be explored in other disciplines.

Further Reading

Nicol, D. (2021). The power of internal feedback: exploiting natural comparison processes. Assessment & Evaluation in Higher Education, 46(5), 756–778.

Digital Resources

Fig. 1: Prototype code comparison tool – comparing student’s solution with an AI-generated alternative solution. The student can switch between the two AI-generated alternative versions.

Fig. 2: Prototype code comparison tool – rubric used by students to score their own solution and the two AI-generated alternative versions.

Author Biographies

Béibhinn Nic Ruairí studied Computer Science at Trinity College Dublin, completing a capstone project in 2024 on the topic of “Inner Feedback for Novice Programmers through Comparisons with AI-Generated Solutions”.

Dr Jonathan Dukes is a lecturer in Computer Science at Trinity College Dublin. He is a past recipient of a Trinity Excellence in Teaching Award and is interested in applications of GenAI in computer science education and the effects of GenAI on learning and assessment of novice programmers.